Introduction to Ring-Allreduce

Translated by Claude from the Chinese original.

Today I stumbled upon the ring-allreduce GPU communication algorithm out of curiosity. I originally wanted to read about it on Baidu Research’s page, but couldn’t find the relevant content on their site. After reading the comments in the baidu-allreduce code, I understood it. It’s actually a fairly simple algorithm to explain, so I’ll give a brief overview.

If you want implementation details, you can read the comments on GitHub directly—they’re very clear: https://github.com/baidu-research/baidu-allreduce/blob/master/collectives.cu#L156

- Unless otherwise noted, the images in this post are from a Zhihu answer, because I could not find the original Baidu Research article containing these diagrams.

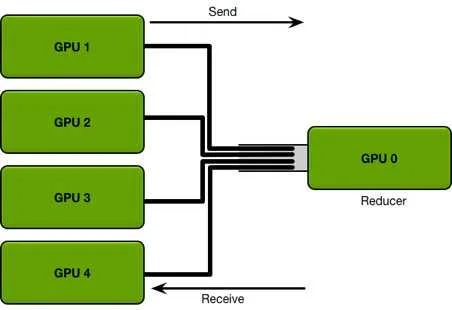

A major drawback of typical multi-GPU training is that one GPU needs to collect gradients from all other GPUs each time, then distribute the updated model back to all other GPUs. As shown below:

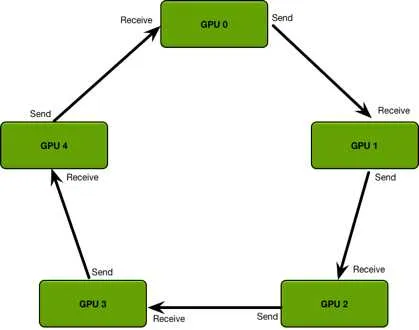

The biggest drawback of this model is that GPU 0’s communication time grows linearly with the number of GPUs. This is why ring-allreduce was developed, as shown below:

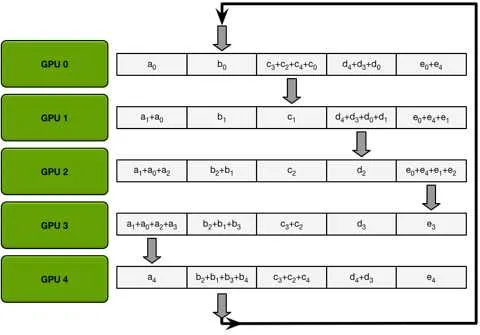

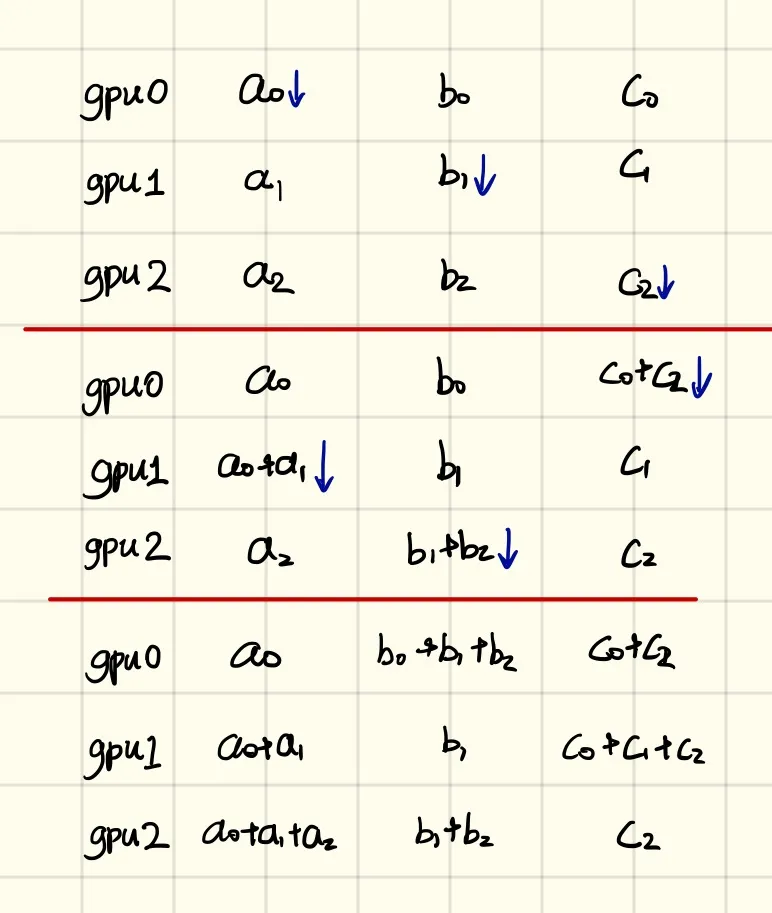

First step (reduce-scatter): We have n GPUs. We divide the data on each GPU into n equal chunks and assign each GPU its left and right neighbors (in the diagram, GPU 0’s left neighbor is GPU 4 and right neighbor is GPU 1; GPU 1’s left neighbor is GPU 0 and right neighbor is GPU 2, and so on). Then we perform n-1 rounds. In round i, GPU j sends its chunk (j - i) mod n to GPU j+1 and receives chunk (j - i - 1) mod n from GPU j-1, then performs a reduce operation on the received data. (All indices use modulo-n wrap-around, e.g., -1 mod n = n - 1.) The diagram below illustrates this:

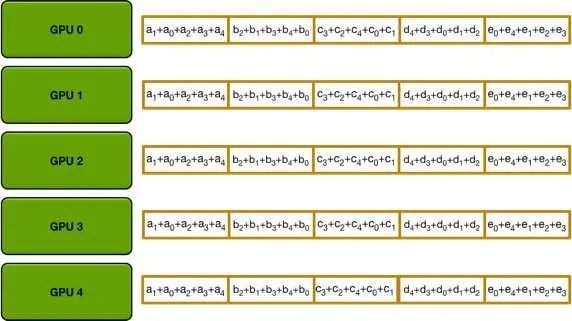

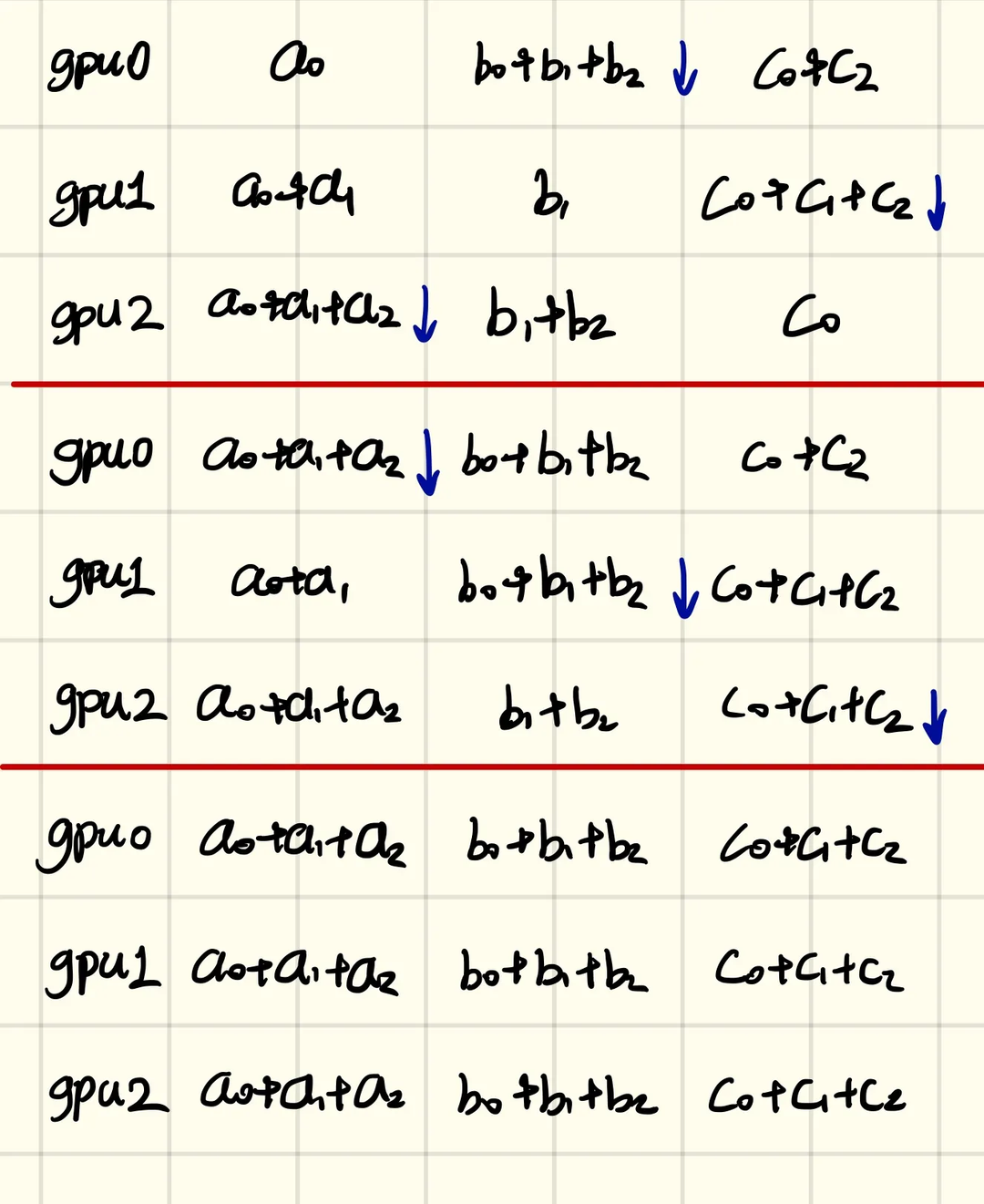

The second step, allgather, is straightforward: through n-1 rounds, each GPU forwards its reduced chunk to other GPUs. In round i, GPU j sends its (j - i - 1) mod n chunk to its right neighbor and receives the (j - i - 2) mod n chunk from its left neighbor. Unlike the first step, the received data does not need a reduce operation; it is copied directly into place.

Finally, the data on each GPU looks like this:

First, the reduce-scatter step:

Then the allgather step:

References: